By Kalte Sonne

By Frank Bosse

A recent article describes the performance of upcoming models, made possible by improved computing power compared with previous high-performance computers:

the “exaflop” generation, where 1 exaflop = 10^18, i.e. one quintillion, floating-point operations per second. These machines should then also make local climate calculations possible, primarily through a finer grid and explicitly represented physics where processes must be parameterized today. That is still a dream of the future. Much more interesting are the article's statements about the previous models. Tim Palmer, a professor at Oxford, puts it this way:

“A highly nonlinear system, where distortions occur that are greater than the signals you’re trying to predict, is really a recipe for unreliability.”

Many laws and physical equations of the climate system are known, but so far it has not been possible to use them, for reasons of computation time. Björn Stevens from the Max Planck Institute for Meteorology (MPI-M) in Hamburg puts it this way:

“We were somehow forbidden to use this understanding by the limits of computation.”

And further:

“People sometimes forget how far some of the fundamental processes in our existing models are from our physical understanding.”

This does not refer only to local phenomena. Stevens describes it this way:

“If we finally succeed in physically describing the pattern of atmospheric deep convection over the warm tropical seas in models, we will be able to understand more deeply how this then forms large waves in the atmosphere, directs the winds and influences the extratropics.”

We hear again and again that the models represent “the physics”. They obviously do not, even for large-scale phenomena. These are very frank words about the current models. In the light of what may one day be possible, they appear as rather crude statistical aids, not as images of reality. At its end, the article describes the goal of modeling with “exascale computing”: a true image of the real terrestrial climate system, a “digital twin”, is to emerge. What we have today is characterized by Björn Stevens as follows:

“… that world policymakers need to rely on climate models as much as farmers rely on weather reports, but this change requires a concerted – and expensive – effort to create some sort of common infrastructure for climate models.”

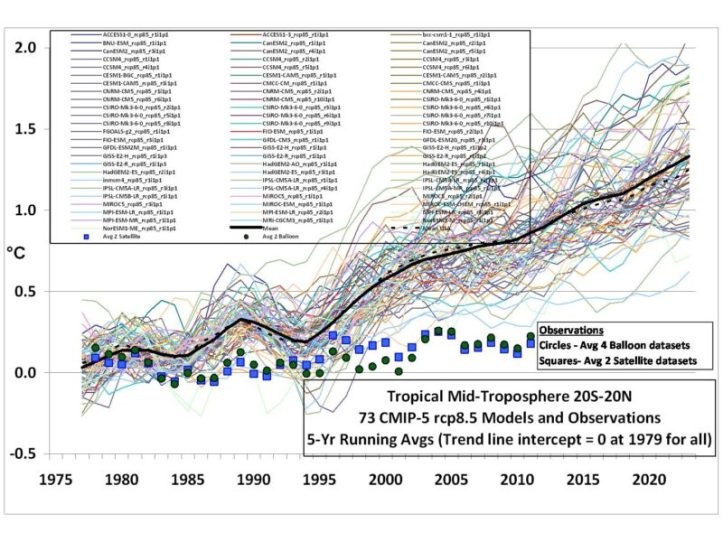

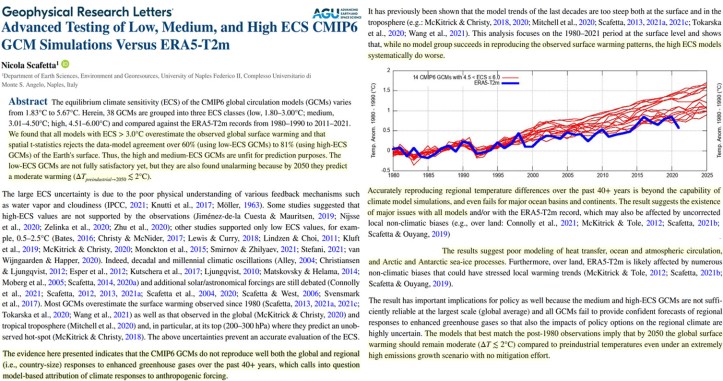

What have we not been told about the performance of climate models! And now it turns out that they are not yet able to reliably inform “the world’s decision-makers”. In truth, they are far from it. In this context, it is also understandable that the latest IPCC assessment report, for the first time, did NOT use the many models that were created especially for it, the CMIP6 family. Rather, the IPCC took its most important core information, “How sensitive is our climate system to an increase in CO2?”, from a paper that combined different estimates without using models.

Unfortunately, this one paper contained some shortcomings and could also be updated: Lewis (2022) reduced the most likely value assumed in IPCC AR6 by more than 30%.

So in climate science a lot is in flux and, as is always the case in science, nothing is set in stone. Will this at some point also reach our media? Or the frightened, who think they are surely the “last generation” before climate collapse, informed by these same media and, as they claim, by “the science”? Or was it, instead of science, works such as “Hothouse Earth” and “Climate Endgame”?

Let us remain optimists!
